
Tutorial Part 2: Computer Vision with Transfer Learning¶

Transfer learning means storing the knowledge gained while solving one problem and applying it to a different but related problem.

TensorFlow offers a good selection of pre-trained models that can be imported directly into a TensorFlow model through TensorFlow Hub. As a complement to the main framework, Google has also published an additional package called TensorFlow Datasets, which provides a collection of the most popular datasets.

In this part of the tutorial we will use the Stanford Dogs dataset, imported through TensorFlow Datasets.

Check GPU availability and TF version¶

import tensorflow as tf

tf.__version__

'2.9.2'

!nvidia-smi

Mon Oct 24 16:53:07 2022

+-----------------------------------------------------------------------------+

| NVIDIA-SMI 460.32.03 Driver Version: 460.32.03 CUDA Version: 11.2 |

|-------------------------------+----------------------+----------------------+

| GPU Name Persistence-M| Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap| Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|===============================+======================+======================|

| 0 Tesla T4 Off | 00000000:00:04.0 Off | 0 |

| N/A 72C P0 30W / 70W | 14632MiB / 15109MiB | 0% Default |

| | | N/A |

+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=============================================================================|

+-----------------------------------------------------------------------------+
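The same check can be done programmatically rather than by reading the table. The sketch below is only an illustration: the helper name `list_gpus` is hypothetical, and it assumes the `nvidia-smi` tool may or may not be on the PATH. Inside TensorFlow, `tf.config.list_physical_devices('GPU')` answers a similar question.

```python
import shutil
import subprocess


def list_gpus():
    """Return one 'name, memory.used, memory.total' string per GPU.

    Returns an empty list when nvidia-smi is missing or fails
    (for example on a CPU-only runtime).
    """
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(
        ["nvidia-smi",
         "--query-gpu=name,memory.used,memory.total",
         "--format=csv,noheader"],
        capture_output=True,
        text=True,
        check=False,
    )
    if result.returncode != 0:
        return []
    return [line.strip() for line in result.stdout.splitlines() if line.strip()]


gpus = list_gpus()
print(gpus if gpus else "No NVIDIA GPU detected")
```

An empty list is a hint to switch the notebook runtime to a GPU before training.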

Get data¶

import tensorflow_datasets as tfds

(train_data, test_data), ds_info = tfds.load(name='stanford_dogs',

split=['train', 'test'],

shuffle_files=True,

as_supervised=True,

with_info=True,

batch_size=32)

ds_info

tfds.core.DatasetInfo(

name='stanford_dogs',

full_name='stanford_dogs/0.2.0',

description="""

The Stanford Dogs dataset contains images of 120 breeds of dogs from around

the world. This dataset has been built using images and annotation from

ImageNet for the task of fine-grained image categorization. There are

20,580 images, out of which 12,000 are used for training and 8580 for

testing. Class labels and bounding box annotations are provided

for all the 12,000 images.

""",

homepage='http://vision.stanford.edu/aditya86/ImageNetDogs/main.html',

data_path='~/tensorflow_datasets/stanford_dogs/0.2.0',

file_format=tfrecord,

download_size=778.12 MiB,

dataset_size=744.72 MiB,

features=FeaturesDict({

'image': Image(shape=(None, None, 3), dtype=tf.uint8),

'image/filename': Text(shape=(), dtype=tf.string),

'label': ClassLabel(shape=(), dtype=tf.int64, num_classes=120),

'objects': Sequence({

'bbox': BBoxFeature(shape=(4,), dtype=tf.float32),

}),

}),

supervised_keys=('image', 'label'),

disable_shuffling=False,

splits={

'test': <SplitInfo num_examples=8580, num_shards=4>,

'train': <SplitInfo num_examples=12000, num_shards=4>,

},

citation="""@inproceedings{KhoslaYaoJayadevaprakashFeiFei_FGVC2011,

author = "Aditya Khosla and Nityananda Jayadevaprakash and Bangpeng Yao and

Li Fei-Fei",

title = "Novel Dataset for Fine-Grained Image Categorization",

booktitle = "First Workshop on Fine-Grained Visual Categorization,

IEEE Conference on Computer Vision and Pattern Recognition",

year = "2011",

month = "June",

address = "Colorado Springs, CO",

}

@inproceedings{imagenet_cvpr09,

AUTHOR = {Deng, J. and Dong, W. and Socher, R. and Li, L.-J. and

Li, K. and Fei-Fei, L.},

TITLE = {{ImageNet: A Large-Scale Hierarchical Image Database}},

BOOKTITLE = {CVPR09},

YEAR = {2009},

BIBSOURCE = "http://www.image-net.org/papers/imagenet_cvpr09.bib"}""",

)

train_data, test_data

(<PrefetchDataset element_spec=(TensorSpec(shape=(None, None, None, 3), dtype=tf.uint8, name=None), TensorSpec(shape=(None,), dtype=tf.int64, name=None))>, <PrefetchDataset element_spec=(TensorSpec(shape=(None, None, None, 3), dtype=tf.uint8, name=None), TensorSpec(shape=(None,), dtype=tf.int64, name=None))>)
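The leading `None` in each element spec is the batch dimension created by `batch_size=32`. As a quick arithmetic check, the sketch below works out how many batches each split yields. It assumes the final partial batch is kept rather than dropped, and the constant names are illustrative only.

```python
import math

BATCH_SIZE = 32
NUM_TRAIN = 12000  # from ds_info.splits['train']
NUM_TEST = 8580    # from ds_info.splits['test']

# A final partial batch still counts as a batch, hence the ceiling.
train_batches = math.ceil(NUM_TRAIN / BATCH_SIZE)
test_batches = math.ceil(NUM_TEST / BATCH_SIZE)

# Number of images left over for the last test batch.
last_test_batch = NUM_TEST - (test_batches - 1) * BATCH_SIZE

print(train_batches, test_batches, last_test_batch)  # 375 269 4
```

These counts match the `375/375` and `269/269` step counters shown in the training and evaluation output later in this tutorial.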

Prepare data¶

Batch and prefetch¶

import tensorflow as tf

# Not needed here: tfds.load(..., batch_size=32) has already batched the datasets for us

# train_data = train_data.batch(32).prefetch(tf.data.AUTOTUNE)

# test_data = test_data.batch(32).prefetch(tf.data.AUTOTUNE)

train_data = train_data.map(lambda image, label: (tf.image.resize(image, (224, 224)), label)).prefetch(tf.data.AUTOTUNE)

test_data = test_data.map(lambda image, label: (tf.image.resize(image, (224, 224)), label)).prefetch(tf.data.AUTOTUNE)

train_data, test_data

(<PrefetchDataset element_spec=(TensorSpec(shape=(None, 224, 224, 3), dtype=tf.float32, name=None), TensorSpec(shape=(None,), dtype=tf.int64, name=None))>, <PrefetchDataset element_spec=(TensorSpec(shape=(None, 224, 224, 3), dtype=tf.float32, name=None), TensorSpec(shape=(None,), dtype=tf.int64, name=None))>)

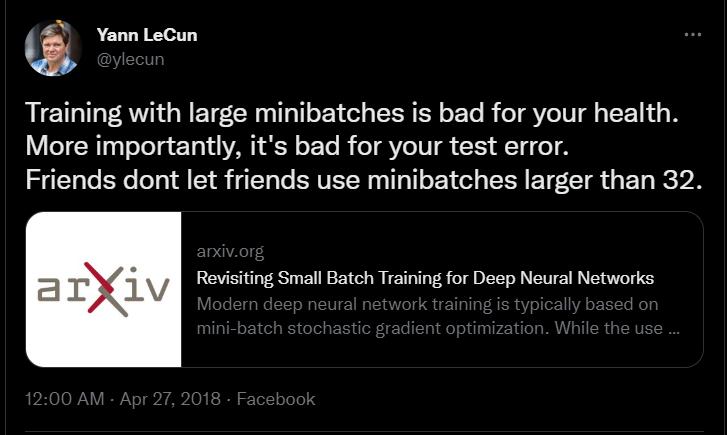

Note:

- Batch size - the number of samples that are passed to the network at once. See the paper "Revisiting Small Batch Training for Deep Neural Networks".

- Prefetching - overlaps the preprocessing and model execution of a training step. While the model is executing training step s, the input pipeline is reading the data for step s+1. tf.data.AUTOTUNE tunes the prefetch buffer size dynamically at runtime.
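To illustrate the overlap that prefetching provides, here is a minimal pure-Python sketch that reads ahead in a background thread. It is not how `tf.data` is implemented. The `prefetch` helper and its `buffer_size` parameter are hypothetical names chosen for this example.

```python
import queue
import threading


def prefetch(iterable, buffer_size=1):
    """Yield items from `iterable` while a background thread reads ahead.

    Up to `buffer_size` items are prepared in advance, so the consumer
    (the "training step") rarely waits for the producer (the "input pipeline").
    """
    buffer = queue.Queue(maxsize=buffer_size)
    sentinel = object()  # marks the end of the stream

    def producer():
        for item in iterable:
            buffer.put(item)  # blocks while the buffer is full
        buffer.put(sentinel)

    threading.Thread(target=producer, daemon=True).start()

    while True:
        item = buffer.get()
        if item is sentinel:
            return
        yield item


# While the loop body handles batch s, the producer is already fetching s+1.
batches = list(prefetch(range(5), buffer_size=2))
print(batches)  # [0, 1, 2, 3, 4]
```

With `tf.data.AUTOTUNE` the buffer size is not fixed by hand as it is here but chosen by the runtime.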

Data Augmentation¶

Data augmentation is an important technique for reducing overfitting.

- Data Augmentation - a technique to increase the diversity of your training set by applying random (but realistic) transformations, such as image rotation.

- Overfitting - occurs when a statistical model fits its training data too closely and therefore generalizes poorly to unseen data.

# Build data augmentation layer

# Note: in Keras, a model can itself be used as a layer inside another model

from tensorflow.keras import layers

data_augmentation = tf.keras.models.Sequential([

layers.RandomHeight(0.2), # https://www.tensorflow.org/api_docs/python/tf/keras/layers/RandomHeight

layers.RandomWidth(0.2), # https://www.tensorflow.org/api_docs/python/tf/keras/layers/RandomWidth

layers.RandomFlip(), # https://www.tensorflow.org/api_docs/python/tf/keras/layers/RandomFlip

layers.RandomZoom(0.2), # https://www.tensorflow.org/api_docs/python/tf/keras/layers/RandomZoom

layers.RandomRotation(0.2) # https://www.tensorflow.org/api_docs/python/tf/keras/layers/RandomRotation

], name='data_augmentation')
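To make "random but realistic transformation" concrete, the following pure-Python sketch applies a random horizontal flip to a tiny image stored as nested lists. It is a simplified stand-in, not the Keras layer: `layers.RandomFlip()` with default arguments also flips vertically, and the function name and `p` parameter here are illustrative.

```python
import random


def random_horizontal_flip(image, rng, p=0.5):
    """Mirror each row of `image` (a list of pixel rows) with probability `p`.

    The label is untouched: a mirrored dog is still the same breed.
    """
    if rng.random() < p:
        return [list(reversed(row)) for row in image]
    return [list(row) for row in image]


image = [[1, 2, 3],
         [4, 5, 6]]
flipped = [[3, 2, 1],
           [6, 5, 4]]

rng = random.Random(0)  # seeded so the run is reproducible
augmented = [random_horizontal_flip(image, rng) for _ in range(4)]
for sample in augmented:
    print(sample)
```

Each call returns either the original or the mirrored image, so over many epochs the model sees more variety than the raw training set contains.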

Build model¶

Get pretrained model¶


base_model = tf.keras.applications.EfficientNetB0(include_top=False)

base_model.trainable = False # Freeze the model's weights

Callbacks¶

- ModelCheckpoint - saves the model or model weights at some frequency.

- EarlyStopping - stops training when a monitored metric has stopped improving.

checkpoint_callback = tf.keras.callbacks.ModelCheckpoint(filepath='model_checkpoints/checkpoint.ckpt',

save_weights_only=True,

save_best_only=True,

save_freq='epoch',

verbose=1)

early_stopping_callback = tf.keras.callbacks.EarlyStopping(monitor='val_loss',

verbose=1,

restore_best_weights=True,

patience=5)

Create a model with Functional API¶

inputs = tf.keras.layers.Input(shape=(224, 224, 3), name='input_layer')

x = data_augmentation(inputs)

x = base_model(x, training=False)

x = tf.keras.layers.GlobalAveragePooling2D(name='global_average_pooling_layer')(x)

outputs = tf.keras.layers.Dense(120, activation='softmax', name='output_layer')(x)

model = tf.keras.models.Model(inputs, outputs, name='cv_model')

model.summary()

Model: "cv_model"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

input_layer (InputLayer) [(None, 224, 224, 3)] 0

data_augmentation (Sequenti (None, 224, 224, 3) 0

al)

efficientnetb0 (Functional) (None, None, None, 1280) 4049571

global_average_pooling_laye (None, 1280) 0

r (GlobalAveragePooling2D)

output_layer (Dense) (None, 120) 153720

=================================================================

Total params: 4,203,291

Trainable params: 153,720

Non-trainable params: 4,049,571

_________________________________________________________________
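The parameter counts in the summary can be checked by hand. The sketch below recomputes them from the shapes shown above. The variable names are illustrative only.

```python
# Shapes taken from the model summary.
pooled_features = 1280   # output size of the global average pooling layer
num_classes = 120        # one output unit per dog breed
base_params = 4_049_571  # frozen EfficientNetB0 parameters

# A Dense layer has one weight per (input, output) pair plus one bias per output.
dense_params = pooled_features * num_classes + num_classes

total_params = base_params + dense_params

print(dense_params)  # 153720
print(total_params)  # 4203291
```

Only the 153,720 parameters of the output layer are trainable, because the base model was frozen with `base_model.trainable = False`.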

model.compile(loss=tf.keras.losses.SparseCategoricalCrossentropy(),

optimizer=tf.keras.optimizers.Adam(),

metrics=['accuracy'])
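Sparse categorical cross-entropy takes integer class labels (as provided by this dataset) and, for each sample, returns the negative log of the probability assigned to the true class. The pure-Python sketch below shows the per-sample formula only. It does not reproduce the batching and averaging of the Keras implementation, and the label values are made up.

```python
import math


def sparse_categorical_crossentropy(probs, label):
    """Loss for one sample: -log of the probability given to the true class."""
    return -math.log(probs[label])


num_classes = 120

# A model that knows nothing spreads probability evenly over all classes.
uniform = [1.0 / num_classes] * num_classes
baseline_loss = sparse_categorical_crossentropy(uniform, label=7)
print(round(baseline_loss, 2))  # 4.79, i.e. ln(120)

# A confident and correct prediction gives a much smaller loss.
confident = [0.1, 0.7, 0.2]
print(sparse_categorical_crossentropy(confident, label=1) < baseline_loss)  # True
```

The value ln(120) ≈ 4.79 is a useful reference point: a training loss well below it means the model is doing better than uniform guessing.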

model.fit(train_data,

validation_data=test_data,

validation_steps=int(0.2*len(test_data)),

epochs=100,

callbacks=[early_stopping_callback,

checkpoint_callback],

verbose=1)

Epoch 1/100
Epoch 1: val_loss improved from inf to 2.24280, saving model to model_checkpoints/checkpoint.ckpt
375/375 [==============================] - 89s 221ms/step - loss: 3.8025 - accuracy: 0.1755 - val_loss: 2.2428 - val_accuracy: 0.4900

Epoch 2/100
Epoch 2: val_loss improved from 2.24280 to 1.78028, saving model to model_checkpoints/checkpoint.ckpt
375/375 [==============================] - 78s 206ms/step - loss: 3.0469 - accuracy: 0.3007 - val_loss: 1.7803 - val_accuracy: 0.5590

Epoch 3/100
Epoch 3: val_loss improved from 1.78028 to 1.69082, saving model to model_checkpoints/checkpoint.ckpt
375/375 [==============================] - 76s 201ms/step - loss: 2.8219 - accuracy: 0.3380 - val_loss: 1.6908 - val_accuracy: 0.5696

Epoch 4/100
Epoch 4: val_loss improved from 1.69082 to 1.65986, saving model to model_checkpoints/checkpoint.ckpt
375/375 [==============================] - 76s 201ms/step - loss: 2.6891 - accuracy: 0.3624 - val_loss: 1.6599 - val_accuracy: 0.5625

Epoch 5/100
Epoch 5: val_loss improved from 1.65986 to 1.56977, saving model to model_checkpoints/checkpoint.ckpt
375/375 [==============================] - 74s 196ms/step - loss: 2.6073 - accuracy: 0.3811 - val_loss: 1.5698 - val_accuracy: 0.5784

Epoch 6/100
Epoch 6: val_loss did not improve from 1.56977
375/375 [==============================] - 70s 186ms/step - loss: 2.5088 - accuracy: 0.4027 - val_loss: 1.5869 - val_accuracy: 0.5749

Epoch 7/100
Epoch 7: val_loss improved from 1.56977 to 1.55353, saving model to model_checkpoints/checkpoint.ckpt
375/375 [==============================] - 69s 184ms/step - loss: 2.4902 - accuracy: 0.4025 - val_loss: 1.5535 - val_accuracy: 0.5784

Epoch 8/100
Epoch 8: val_loss improved from 1.55353 to 1.55162, saving model to model_checkpoints/checkpoint.ckpt
375/375 [==============================] - 69s 182ms/step - loss: 2.3965 - accuracy: 0.4259 - val_loss: 1.5516 - val_accuracy: 0.5831

Epoch 9/100
Epoch 9: val_loss improved from 1.55162 to 1.50542, saving model to model_checkpoints/checkpoint.ckpt
375/375 [==============================] - 69s 184ms/step - loss: 2.3380 - accuracy: 0.4368 - val_loss: 1.5054 - val_accuracy: 0.6061

Epoch 10/100
Epoch 10: val_loss did not improve from 1.50542
375/375 [==============================] - 67s 179ms/step - loss: 2.4117 - accuracy: 0.4186 - val_loss: 1.5490 - val_accuracy: 0.5831

Epoch 11/100
Epoch 11: val_loss did not improve from 1.50542
375/375 [==============================] - 65s 173ms/step - loss: 2.2914 - accuracy: 0.4478 - val_loss: 1.5647 - val_accuracy: 0.5896

Epoch 12/100
Epoch 12: val_loss did not improve from 1.50542
375/375 [==============================] - 64s 171ms/step - loss: 2.2642 - accuracy: 0.4505 - val_loss: 1.5737 - val_accuracy: 0.5837

Epoch 13/100
Epoch 13: val_loss did not improve from 1.50542
375/375 [==============================] - 65s 172ms/step - loss: 2.2836 - accuracy: 0.4470 - val_loss: 1.5595 - val_accuracy: 0.5825

Epoch 14/100
Epoch 14: val_loss improved from 1.50542 to 1.49176, saving model to model_checkpoints/checkpoint.ckpt
375/375 [==============================] - 63s 168ms/step - loss: 2.2200 - accuracy: 0.4554 - val_loss: 1.4918 - val_accuracy: 0.6002

Epoch 15/100
Epoch 15: val_loss did not improve from 1.49176
375/375 [==============================] - 64s 170ms/step - loss: 2.2305 - accuracy: 0.4529 - val_loss: 1.5279 - val_accuracy: 0.5973

Epoch 16/100
Epoch 16: val_loss did not improve from 1.49176
375/375 [==============================] - 64s 171ms/step - loss: 2.2025 - accuracy: 0.4647 - val_loss: 1.5671 - val_accuracy: 0.5879

Epoch 17/100
Epoch 17: val_loss did not improve from 1.49176
375/375 [==============================] - 64s 170ms/step - loss: 2.2152 - accuracy: 0.4616 - val_loss: 1.4943 - val_accuracy: 0.6008

Epoch 18/100
Epoch 18: val_loss did not improve from 1.49176
375/375 [==============================] - 62s 166ms/step - loss: 2.1761 - accuracy: 0.4733 - val_loss: 1.5502 - val_accuracy: 0.5949

Epoch 19/100
Restoring model weights from the end of the best epoch: 14.
Epoch 19: val_loss did not improve from 1.49176
375/375 [==============================] - 63s 169ms/step - loss: 2.1318 - accuracy: 0.4801 - val_loss: 1.5312 - val_accuracy: 0.5973
Epoch 19: early stopping

<keras.callbacks.History at 0x7fb200340310>
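The stopping point in the log above follows directly from `patience=5`. The sketch below replays the logged `val_loss` values through a simplified patience counter. It is an illustration of the idea, not the Keras source, and the function name `replay_early_stopping` is hypothetical.

```python
# val_loss per epoch, copied from the training log (epochs 1 to 19).
val_losses = [
    2.2428, 1.7803, 1.6908, 1.6599, 1.5698, 1.5869, 1.5535, 1.5516, 1.5054,
    1.5490, 1.5647, 1.5737, 1.5595, 1.4918, 1.5279, 1.5671, 1.4943, 1.5502,
    1.5312,
]


def replay_early_stopping(losses, patience):
    """Return (stopping_epoch, best_epoch), both 1-based.

    stopping_epoch is None if the patience is never exhausted.
    """
    best = float("inf")
    best_epoch = None
    wait = 0  # epochs since the last improvement
    for epoch, loss in enumerate(losses, start=1):
        if loss < best:
            best, best_epoch, wait = loss, epoch, 0
        else:
            wait += 1
            if wait >= patience:
                return epoch, best_epoch
    return None, best_epoch


stopped_at, best_epoch = replay_early_stopping(val_losses, patience=5)
print(stopped_at, best_epoch)  # 19 14
```

Epoch 14 has the lowest validation loss, the next five epochs fail to beat it, and training stops at epoch 19. Because `restore_best_weights=True`, the model ends up with the weights from epoch 14.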

model.evaluate(test_data) # Not ideal: test_data was also used as validation data during training; a separate validation split should be used for that

269/269 [==============================] - 27s 102ms/step - loss: 1.5423 - accuracy: 0.5885

[1.5422791242599487, 0.5884615182876587]
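A few lines of arithmetic help put these numbers in context. The sketch assumes the reported accuracy is the plain fraction of correctly classified test images. The variable names are illustrative.

```python
num_classes = 120
num_test_images = 8580
num_test_batches = 269
batch_size = 32
test_accuracy = 0.5884615182876587  # second value returned by model.evaluate

# Accuracy expected from guessing uniformly at random among 120 breeds.
chance_accuracy = 1 / num_classes

# Number of test images classified correctly.
correct = round(test_accuracy * num_test_images)

# During fit(), validation ran on only 20% of the test batches.
validation_steps = int(0.2 * num_test_batches)
validation_images = validation_steps * batch_size

print(round(chance_accuracy, 4))            # 0.0083
print(correct)                              # 5049
print(validation_steps, validation_images)  # 53 1696
```

The model is therefore far above chance level. The `val_accuracy` figures in the training log come from a 1,696-image subset, which is why they differ slightly from the full-test-set accuracy.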

How can we improve our model?¶

- Get more data

- Try different architecture (more complex or simpler)

- Train for longer

So if you want to reach good results: experiment, experiment, experiment! Good luck!

More model examples:¶

One more example of a computer vision classifier can be found here: an AlexNet convolutional neural network (CNN) that reaches 99.27% accuracy.

An example of creating an image tf.data.Dataset from folders of images can be found there as well. More information.